What are Large Language Models? – LLM AI Explained

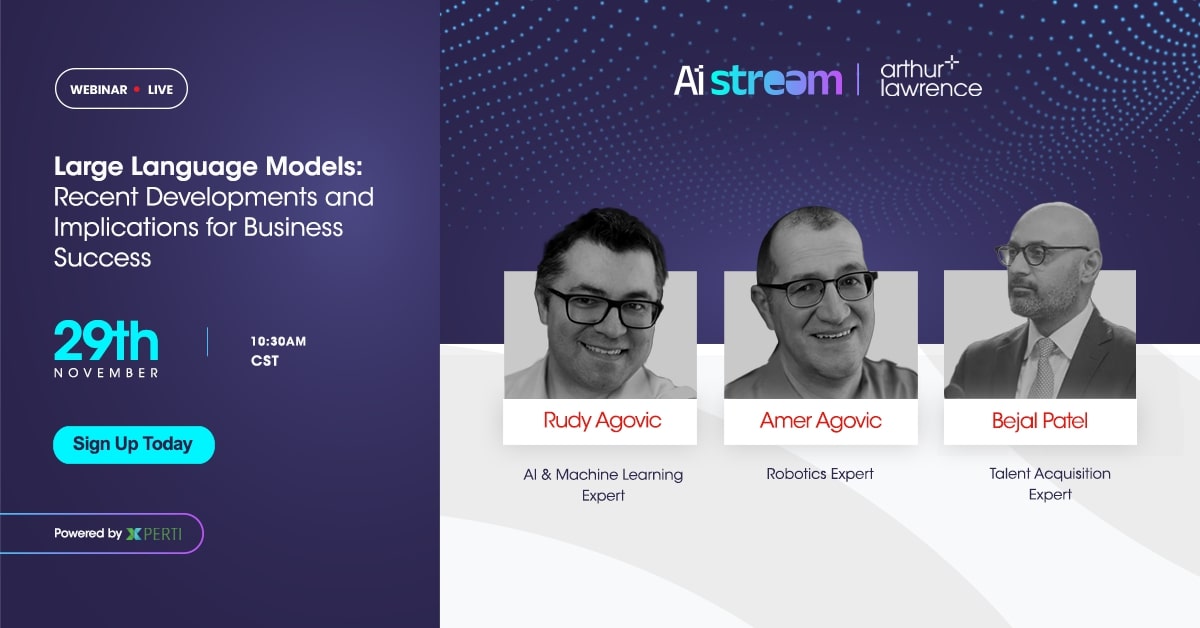

Gear up, techies! Xperti is bringing you a very exciting webinar on Large Language Models, their recent developments, and their implications for business success. Before diving into the webinar, we want to make sure you are well-equipped with the relevant background. So, give this article a read to learn what an LLM is, and take note of any interesting thoughts you have along the way so you can ask our webinar speakers, Rudy and Amer Agovic.

First things first: let's meet the speakers. Who are Rudy and Amer Agovic? They are brothers and experts in AI and machine learning, and the co-founders of Reliancy, a company that helps businesses harness the power of data. Rudy is also the chief analytics officer of Clarity Data Insights, a company that provides sales enablement and AI development solutions. Both hold PhDs from the University of Minnesota and have published at top-tier conferences in their fields.

Who better to demystify the ins and outs of AI and machine learning than these two? Before we get to what they will cover in the webinar, let's answer the basic question: what is an LLM? Let's get into it.

Large Language Models

A Large Language Model (LLM) is a neural network with billions of parameters that is trained with self-supervised learning to process and understand natural language. In search applications, for example, LLMs can generate relevant and diverse suggestions for user queries based on embeddings of product or content text.
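"Self-supervised" means the training labels come from the text itself rather than from human annotators. One common objective, used by GPT-style models (though not by every LLM; BERT, for instance, uses masked-word prediction instead), is next-token prediction. The minimal sketch below assumes a toy whitespace tokenizer and a hypothetical helper, `next_token_pairs`, to show how raw text turns into (context, target) training examples.

```python
def next_token_pairs(text: str, context_size: int = 3) -> list[tuple[list[str], str]]:
    """Turn raw, unlabeled text into (context, target) training examples.

    The "label" for each example is simply the token that follows the
    context, so no human annotation is needed.
    """
    tokens = text.split()  # toy whitespace tokenizer; real LLMs use subword tokenizers
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]  # up to context_size preceding tokens
        pairs.append((context, tokens[i]))
    return pairs


for context, target in next_token_pairs("large language models learn from raw text"):
    print(context, "->", target)
```

A real model sees billions of such examples and learns to assign high probability to the correct next token.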

Some of the key concepts and techniques that enable LLMs are:

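Transformers: an architecture that uses attention mechanisms to focus on the most relevant parts of a sequence. Because it processes all tokens in parallel rather than one at a time, it avoids the difficulty earlier recurrent models had in retaining information over long sequences.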

Self-attention: a mechanism that lets the model learn the relationships between different tokens within the same sequence, capturing long-range dependencies and context.

Pre-training and fine-tuning: a two-stage process that involves training the model on a large corpus of unlabeled data to learn general language features and then fine-tuning the model on a smaller dataset of labeled data to adapt to a specific task.

Decoding and output generation: the process of choosing tokens one at a time to form the output sequence, either by selecting the most likely token or by sampling, using strategies such as greedy search, beam search, and top-k sampling.
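To make the transformer and self-attention entries above more concrete, here is a minimal, single-head sketch of scaled dot-product self-attention (the formulation introduced with the original Transformer) in pure Python. The toy dimensions, random stand-in weights, and helper names (`matmul`, `self_attention`, `random_matrix`) are illustrative assumptions; real models use many attention heads, learned weights, and optimized tensor libraries.

```python
import math
import random


def softmax(xs):
    """Convert a list of scores into probabilities that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sequence.

    x holds one vector per token (seq_len x d_model). Every token attends to
    every token in the same sequence, including itself; no causal mask is applied.
    Returns the output vectors and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for q_i in q:
        # Similarity of this token's query to every token's key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        attn = softmax(scores)
        weights.append(attn)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(attn[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights


def random_matrix(rows, cols, rng):
    """Stand-in for learned weights: a rows x cols matrix of Gaussian noise."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_k = 4, 4
x = random_matrix(3, d_model, rng)  # three toy token embeddings
out, attn = self_attention(
    x,
    random_matrix(d_model, d_k, rng),
    random_matrix(d_model, d_k, rng),
    random_matrix(d_model, d_k, rng),
)
for row in attn:
    print([round(w, 3) for w in row])  # each row is a probability distribution over the 3 tokens
```

Decoder-only models such as GPT add a causal mask so that each token can attend only to earlier positions; that detail is left out here to keep the sketch short.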
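The decoding strategies listed above differ in how they turn the model's scores for the next token into a choice. The sketch below contrasts greedy search with top-k sampling over a single step; the five-word vocabulary, the logit values, and the helper names are made up for illustration and do not come from any real model.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits):
    """Greedy decoding: always choose the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def top_k_sample(logits, k, rng):
    """Top-k sampling: keep the k highest-scoring tokens, renormalize, then sample one."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top])
    return rng.choices(top, weights=probs, k=1)[0]


vocab = ["cat", "dog", "car", "tree", "sky"]
logits = [2.0, 1.5, 0.3, -1.0, -2.0]  # hypothetical scores for the next token
rng = random.Random(42)

print("greedy:", vocab[greedy_pick(logits)])  # always "cat", the top-scoring token
print("top-k:", [vocab[top_k_sample(logits, k=2, rng=rng)] for _ in range(5)])  # only "cat" or "dog"
```

Greedy search is deterministic but can be repetitive; top-k sampling adds variety while still ruling out unlikely tokens. Beam search, the third strategy named above, instead keeps several candidate sequences at each step and is omitted here for brevity.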

Some of the recent developments and challenges in LLMs are:

Scaling up model size and parameter count: LLMs have grown rapidly in size and complexity, with models such as GPT-3 (175 billion parameters) and Megatron-Turing NLG (530 billion parameters) reaching hundreds of billions of parameters. However, this growth also increases the computational cost, energy consumption, and environmental impact of training and deploying LLMs.

Improving model quality and diversity: LLMs have achieved impressive results on natural language tasks such as text generation, machine translation, and summarization. However, their outputs can still suffer from repetition, inconsistency, bias, and toxicity, so methods to improve the quality and diversity of LLM outputs are needed, such as data augmentation, regularization, and better evaluation metrics.

Expanding model scope and applicability: LLMs have mainly been developed and tested on English and other high-resource languages, which limits their applicability to other languages and domains. Methods to expand their scope include multilingual models, cross-lingual models, and domain adaptation.
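A quick back-of-the-envelope calculation shows why scaling up drives cost. Assuming each parameter is stored as a 2-byte (16-bit) floating-point number, GPT-3's 175 billion parameters need about 350 GB just to hold the weights, before counting activations, optimizer state during training, or serving overhead. The hypothetical helper below simply multiplies parameter count by bytes per parameter for a few common number formats.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed just to store the model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175e9  # GPT-3's published parameter count

# Common storage formats and their size per parameter in bytes.
for label, nbytes in [("fp32", 4), ("fp16/bf16", 2), ("int8", 1)]:
    print(f"{label:>9}: {weight_memory_gb(GPT3_PARAMS, nbytes):,.0f} GB")
```

Even at 16 bits per parameter, the weights alone exceed the memory of any single mainstream GPU, which is why models of this size are split across many accelerators.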

What is LLM Search?

LLM search is a technique that uses the text generation capabilities of large language models to enhance the search experience for users. LLMs can generate relevant and diverse suggestions for user queries based on embeddings of product or content text. LLM search can be applied to many domains, such as product search, news search, and academic search.
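One common way to implement the embedding-based part of LLM search is to represent the query and each item's text as vectors and rank items by cosine similarity. The sketch below assumes that approach (it is not necessarily how any particular product works) and uses hand-written, hypothetical 3-dimensional vectors in place of a real embedding model, which would produce hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, catalog):
    """Return (name, score) pairs sorted from most to least similar to the query."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in catalog.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embeddings; a real system would get these from an embedding model.
catalog = {
    "trail running shoes": [0.9, 0.1, 0.0],
    "waterproof hiking boots": [0.8, 0.3, 0.1],
    "stainless steel frying pan": [0.0, 0.2, 0.9],
}
query = [0.85, 0.2, 0.05]  # pretend embedding of the query "shoes for hiking"

for name, score in rank_by_similarity(query, catalog):
    print(f"{score:.3f}  {name}")
```

The footwear items score close to each other and far above the frying pan, which is the behavior that lets embedding search match on meaning rather than exact keywords.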

What are Google LLMs?

Google LLMs are large language models developed by Google that can process and generate natural language at scale. They are trained on massive text datasets drawn from sources such as books, articles, websites, and social media posts. Google LLMs support a range of applications, such as powering digital assistants, generating better search results and product recommendations, and enabling smarter platform curation and safety features. Examples of Google LLMs include BERT, PaLM 2, and LaMDA.

Now you have the basics you need to get the most out of this webinar. What are you waiting for? Sign up for free now!